0x02. Prompt Template

String Prompt Template

Used only for plain-text input.

```python
# Prompt Template
```

The programming pattern for a Prompt Template is:

- Create a Prompt Template.

- Pass parameters to the Prompt Template, which turns the template into a concrete prompt.

This template fits cases where:

- The model's input is plain text

- The task is simple

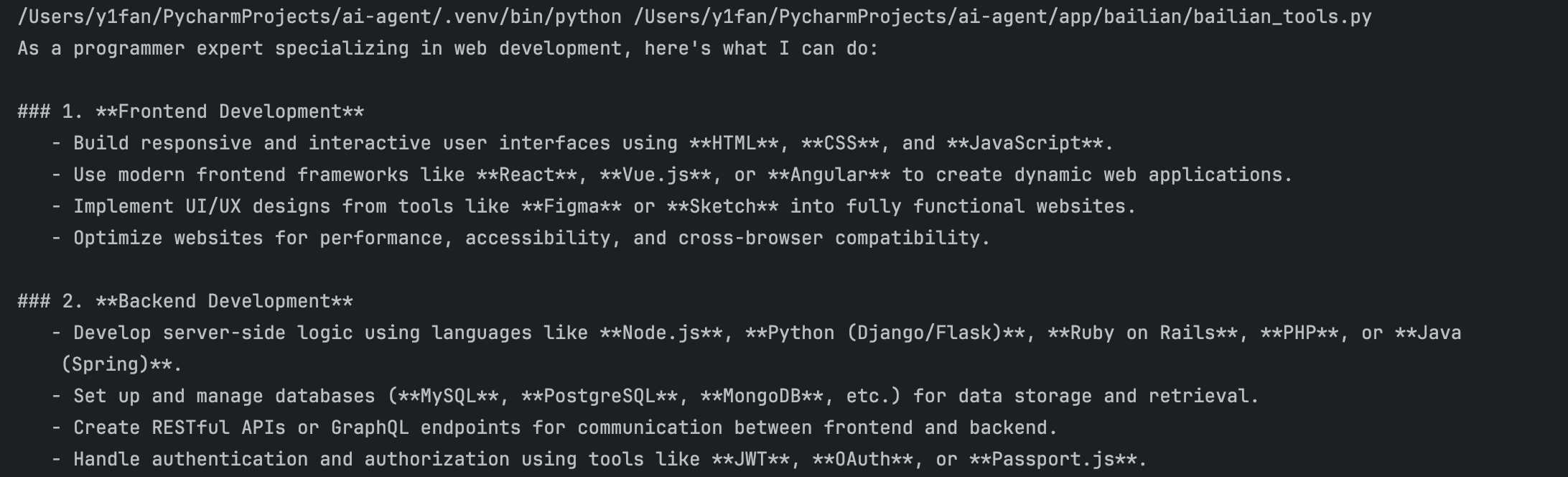
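To make the two steps concrete, here is a minimal plain-Python sketch of what a string prompt template does. SimplePromptTemplate is a hypothetical stand-in, not LangChain's PromptTemplate class: it records the {placeholder} names found in the template and fills them with str.format when format is called.

```python
import string


class SimplePromptTemplate:
    """Hypothetical stand-in for a string prompt template (not LangChain's class)."""

    def __init__(self, template: str):
        self.template = template
        # Collect the {placeholder} names that appear in the template string.
        self.input_variables = [
            name for _, name, _, _ in string.Formatter().parse(template) if name
        ]

    def format(self, **kwargs) -> str:
        # Fail early if a placeholder has no value.
        missing = set(self.input_variables) - set(kwargs)
        if missing:
            raise KeyError(f"missing variables: {sorted(missing)}")
        return self.template.format(**kwargs)


# Step 1: create the template. Step 2: pass parameters to get the prompt.
template = SimplePromptTemplate("Translate the following text to Chinese: {text}")
prompt = template.format(text="Good morning")
print(template.input_variables)
print(prompt)
```

The resulting prompt is a single plain-text string, which is why this kind of template suits simple, single-turn tasks.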

Chat Prompt Template

Used for chat tasks: the input is a list of messages (SystemMessage, HumanMessage, AIMessage), which lets the template simulate multi-turn conversations.

```python
# Chat Prompt Template
```
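As a rough plain-Python illustration of the idea (not LangChain's API), a chat template can be modeled as a list of (role, template) pairs that are all filled in at once. The Message class, the format_chat helper, and the role names "system", "human", and "ai" are assumptions of this sketch, chosen to mirror SystemMessage, HumanMessage, and AIMessage. The earlier human/ai pair plays the part of a previous round of conversation.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str     # "system", "human" or "ai" (mirrors SystemMessage / HumanMessage / AIMessage)
    content: str


def format_chat(template: list[tuple[str, str]], **kwargs) -> list[Message]:
    """Fill every (role, template_text) pair and return the resulting message list."""
    return [Message(role, text.format(**kwargs)) for role, text in template]


chat_template = [
    ("system", "You are a translator that converts English to {language}."),
    ("human", "Translate 'hello'."),   # an earlier round of the conversation
    ("ai", "你好"),
    ("human", "Translate '{text}'."),  # the new request
]

messages = format_chat(chat_template, language="Chinese", text="goodbye")
for m in messages:
    print(f"{m.role}: {m.content}")
```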


Defining the whole conversation as one ChatPromptTemplate abstracts the prompt, but we often need to reuse individual messages as well. To do that, we can combine ChatPromptTemplate with ChatMessagePromptTemplate, which turns each message into a reusable template of its own.

```python
# ChatMessage Template
```

Then, chat_prompt_template needs to be updated as follows:

```python
chat_prompt_template = ChatPromptTemplate.from_messages([
    ...
])
```

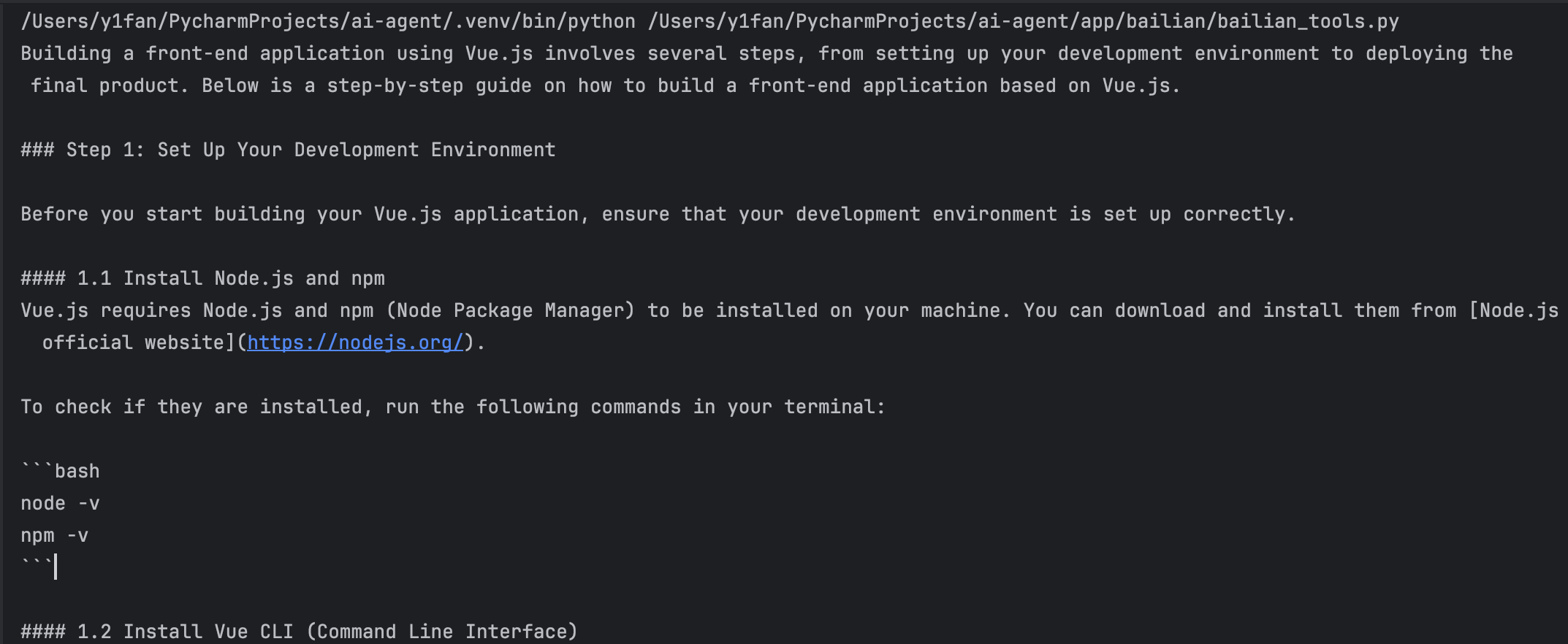
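The benefit of message-level templates is reuse: one message template can be shared by several chat prompts. The sketch below illustrates this in plain Python. MessageTemplate and ChatTemplate are hypothetical stand-ins for ChatMessagePromptTemplate and ChatPromptTemplate, not their real implementations.

```python
from dataclasses import dataclass


@dataclass
class MessageTemplate:
    """Hypothetical stand-in for a message-level template such as ChatMessagePromptTemplate."""
    role: str
    template: str

    def format(self, **kwargs) -> tuple[str, str]:
        return (self.role, self.template.format(**kwargs))


@dataclass
class ChatTemplate:
    """Hypothetical stand-in for a chat prompt assembled from message templates."""
    messages: list[MessageTemplate]

    def format_messages(self, **kwargs) -> list[tuple[str, str]]:
        return [m.format(**kwargs) for m in self.messages]


# The system message is defined once and reused in two different chat prompts.
system_msg = MessageTemplate("system", "You are a helpful assistant who answers in {language}.")

translate_chat = ChatTemplate([system_msg, MessageTemplate("human", "Translate '{text}'.")])
summary_chat = ChatTemplate([system_msg, MessageTemplate("human", "Summarize: {text}")])

print(translate_chat.format_messages(language="Chinese", text="thank you"))
print(summary_chat.format_messages(language="English", text="LangChain prompt templates."))
```

Because str.format ignores unused keyword arguments, each message template simply picks out the variables it needs.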

FewShot Prompt Template

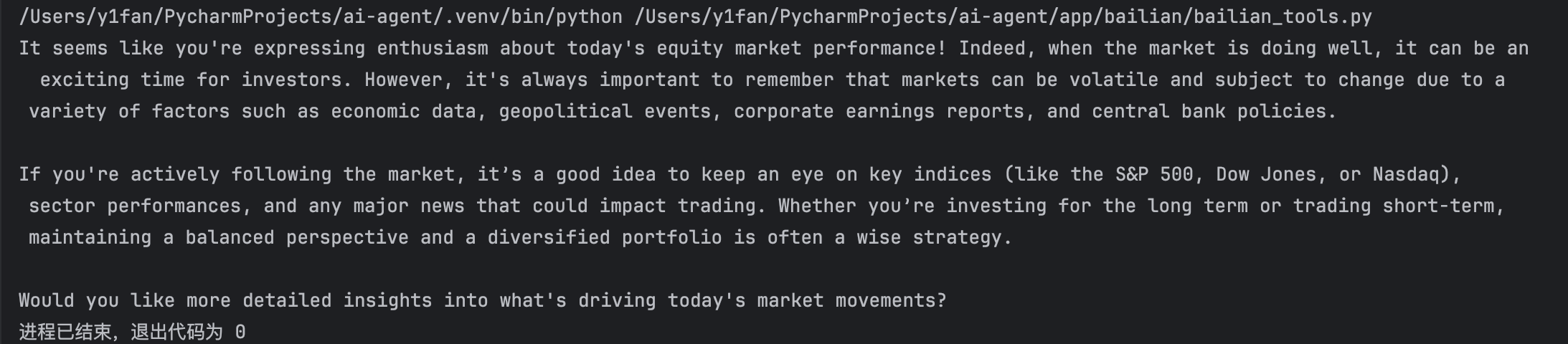

When a task is complex and the model needs examples to guide its behavior, we can use few-shot learning, that is, include worked examples directly in the prompt.

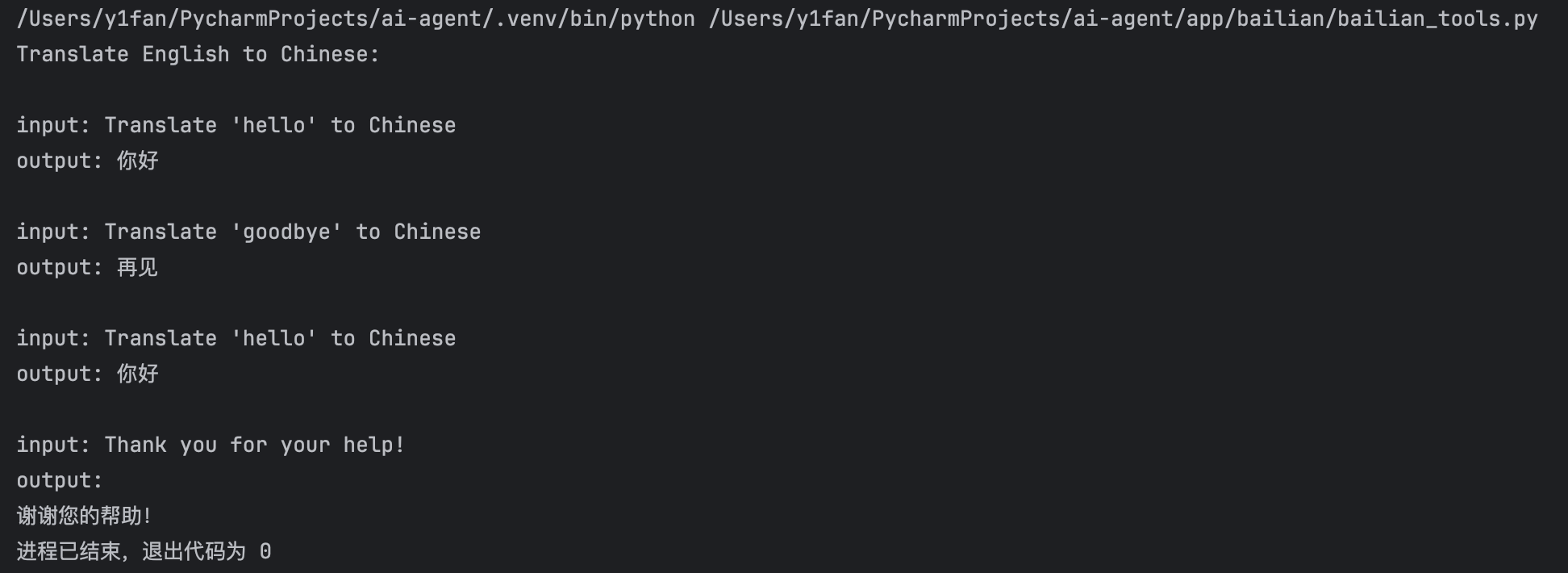

For example, we create a template named few_shot_prompt_template that takes five arguments.

```python
few_shot_prompt_template = FewShotPromptTemplate(
    ...
)
```

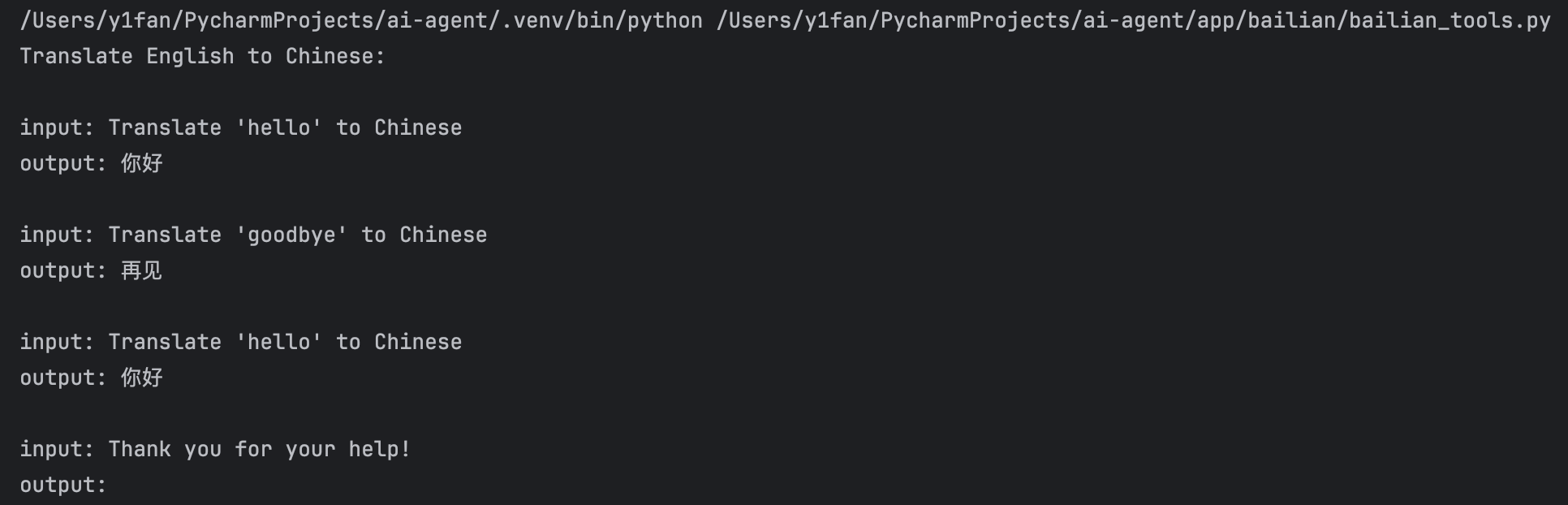

- examples: the example inputs and outputs we want the model to imitate.

```python
example = [
    {"input": "Translate 'hello' to Chinese", "output": "你好"},
    {"input": "Translate 'goodbye' to Chinese", "output": "再见"},
    {"input": "Translate 'hello' to Chinese", "output": "你好"}
]
```

- example_prompt: the template used to render each example.

```python
example_template = "input: {input}\noutput: {output}"
```

- prefix: the goal of this conversation, placed before the examples.

- suffix: the format of this conversation's actual query, placed after the examples.

- input_variables: the variables the template receives as input.
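To see how these pieces fit together, here is a plain-Python sketch of the assembly a few-shot template performs: render each example with the example template, then join the prefix, the rendered examples, and the suffix. The format_few_shot helper, the blank-line separator, and the prefix and suffix strings are assumptions for illustration, not LangChain's implementation; the sketch reuses the first two examples above.

```python
def format_few_shot(examples, example_template, prefix, suffix, separator="\n\n", **kwargs):
    """Hypothetical helper: prefix + rendered examples + suffix, joined by separator."""
    rendered = [example_template.format(**ex) for ex in examples]
    # Only the prefix and suffix receive the caller's variables; examples are fixed.
    return separator.join([prefix.format(**kwargs), *rendered, suffix.format(**kwargs)])


examples = [
    {"input": "Translate 'hello' to Chinese", "output": "你好"},
    {"input": "Translate 'goodbye' to Chinese", "output": "再见"},
]
example_template = "input: {input}\noutput: {output}"

prompt = format_few_shot(
    examples,
    example_template,
    prefix="Translate the input into Chinese. Reply with the translation only.",  # hypothetical
    suffix="input: Translate '{text}' to Chinese\noutput:",                       # hypothetical
    text="Thank you for your help!",
)
print(prompt)
```

Ending the suffix with an empty "output:" invites the model to continue the pattern set by the examples.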

Then, format the template and print the resulting prompt.

```python
prompt = few_shot_prompt_template.format(text="Thank you for your help!")
print(prompt)
```

The output shows the prefix followed by the three examples we provided. Now let the LLM perform the task.

```python
# Create few shot prompt template
```

The LLM's output is just the Chinese text, in the same format as the examples.

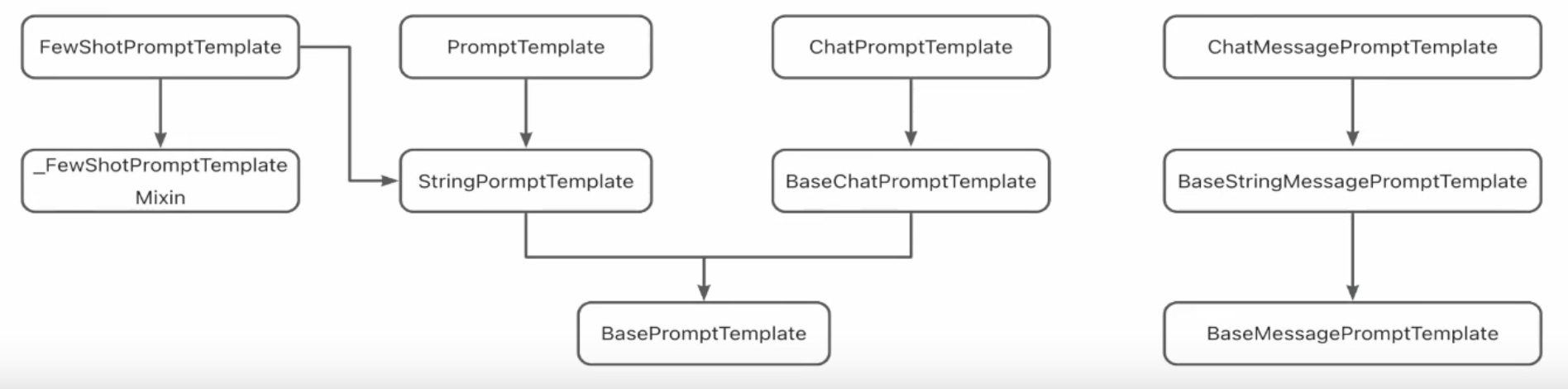

Inheritance Relationship of Common Prompt Template Classes

In short, the base prompt template has two branches: the string template, for single-turn tasks, and the chat template, for multi-turn conversations.

In addition, the message templates target the body of an individual message.
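The relationship can be sketched with a few plain-Python classes. These are hypothetical, simplified stand-ins that follow the description above rather than LangChain's actual class tree: the string and chat branches share one base interface, while the message template works on a single message body and is used inside the chat template.

```python
from abc import ABC, abstractmethod


class BasePrompt(ABC):
    """Common interface: every prompt template turns keyword arguments into model input."""

    @abstractmethod
    def render(self, **kwargs): ...


class StringPrompt(BasePrompt):
    """String branch: one template -> one plain-text prompt (single-turn tasks)."""

    def __init__(self, template: str):
        self.template = template

    def render(self, **kwargs) -> str:
        return self.template.format(**kwargs)


class MessagePrompt:
    """Message-level template: fills in the body of one message; not a prompt by itself."""

    def __init__(self, role: str, template: str):
        self.role, self.template = role, template

    def render(self, **kwargs) -> tuple[str, str]:
        return (self.role, self.template.format(**kwargs))


class ChatPrompt(BasePrompt):
    """Chat branch: a list of message templates -> a list of messages (multi-turn)."""

    def __init__(self, messages: list[MessagePrompt]):
        self.messages = messages

    def render(self, **kwargs) -> list[tuple[str, str]]:
        return [m.render(**kwargs) for m in self.messages]


# Both branches can be used through the same base interface.
prompts: list[BasePrompt] = [
    StringPrompt("Translate '{text}' to Chinese."),
    ChatPrompt([
        MessagePrompt("system", "You translate into Chinese."),
        MessagePrompt("human", "Translate '{text}'."),
    ]),
]
for p in prompts:
    print(type(p).__name__, "->", p.render(text="hello"))
```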